We have been involved in a few projects with hundreds of Bluetooth beacons in one place at the same time, rather than the more usual tens. In these cases we have found detection times to be significantly longer. Until now, we have found it difficult to quantify this behaviour.

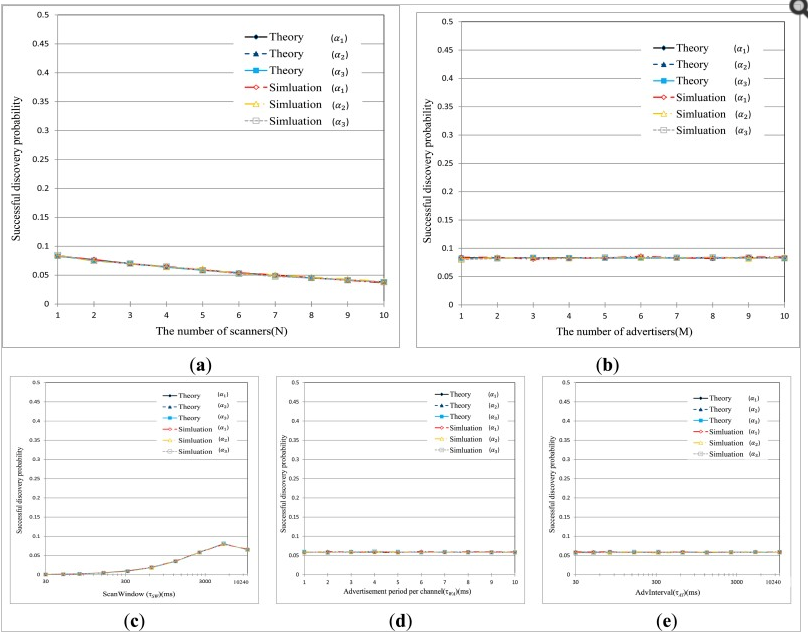

We have found some research, funded by Samsung, on the Analysis of Latency Performance of Bluetooth Low Energy (BLE) Networks. It contains many theoretical predictions, backed up with experimental data, that show how the time to detect varies with the number of scanners, the number of advertisers, the scan window size, the advertisement period and the advertising interval.

The research concludes that as the number of Bluetooth devices increases, delays in device discovery grow exponentially, even when using multiple advertising channels and small frame sizes.

The authors say:

“We find that the inappropriate parameter settings considerably impair the efficiency of BLE devices, and the wide range of BLE parameters provides high flexibility for BLE devices to be customized for different applications.”

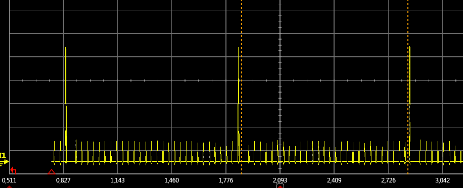

So what typical parameter settings might affect the detection time? What’s really going on? A beacon transmits an advertisement for about a millisecond once every advertising period, which is configurable and typically between 100 ms and 1 second.

In simple terms, if two beacons happen to transmit on the same channel at a similar time, the transmissions collide and the receiving device sees corrupted data. The receiver then has to wait for a later advertisement to possibly see the beacon(s), and this increases the detection time. The chance of a collision increases as the number of devices increases and decreases as the beacon advertising period increases.
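The collision argument above can be roughed out numerically. The following is a minimal sketch, not the model used in the research: it assumes every beacon transmits once per advertising period at an independent, uniformly random time, that all beacons share a single channel, and that a transmission lasts about 1 ms. The function name `collision_probability` and its parameters are made up for illustration.

```python
def collision_probability(num_beacons: int, adv_period_ms: float,
                          tx_ms: float = 1.0) -> float:
    """Estimate the chance that one beacon's transmission overlaps
    at least one other beacon's transmission on the same channel.

    Assumption: start times are independent and uniformly random
    within the advertising period (a simplification).
    """
    if num_beacons < 1:
        raise ValueError("num_beacons must be at least 1")
    if adv_period_ms <= 0 or tx_ms <= 0:
        raise ValueError("durations must be positive")

    # Two transmissions of length tx_ms overlap when their start times
    # differ by less than tx_ms, a window of 2 * tx_ms in each period.
    overlap_fraction = min(1.0, 2.0 * tx_ms / adv_period_ms)

    # Probability that none of the other beacons lands in that window.
    no_collision = (1.0 - overlap_fraction) ** (num_beacons - 1)
    return 1.0 - no_collision


if __name__ == "__main__":
    for period_ms in (100.0, 1000.0):
        for n in (10, 100, 500):
            p = collision_probability(n, period_ms)
            print(f"{n:4d} beacons, {period_ms:6.0f} ms period: "
                  f"collision chance ~ {p:.1%}")
```

Under these assumptions the estimate rises with the number of beacons and falls as the advertising period gets longer, which matches the behaviour described above. Real deployments use three advertising channels and scanners that only listen part of the time, so treat the numbers as indicative only.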

Note that if two beacons collide and have the same advertising interval, it doesn’t mean they will collide next time. The advertising interval has a small amount of randomisation added to make this less likely to happen.
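To illustrate why the randomisation helps, here is a small simulation sketch. It assumes two beacons with the same advertising interval that start at exactly the same instant, and that each advertisement is delayed by a random 0 to 10 ms (our understanding of the pseudo-random delay in the Bluetooth specification; treat the exact range as an assumption). The function name `count_repeat_collisions` and the 1 ms transmission time are illustrative choices.

```python
import random


def count_repeat_collisions(adv_interval_ms: float, events: int,
                            max_delay_ms: float = 10.0,
                            tx_ms: float = 1.0, seed: int = 0) -> int:
    """Count how many of the next `events` advertisements collide for two
    beacons that start in lockstep with the same advertising interval."""
    rng = random.Random(seed)  # seeded so runs are repeatable
    time_a = 0.0
    time_b = 0.0  # both beacons transmit at t = 0, so they collide initially
    collisions = 0
    for _ in range(events):
        # Each beacon waits its interval plus its own random delay.
        time_a += adv_interval_ms + rng.uniform(0.0, max_delay_ms)
        time_b += adv_interval_ms + rng.uniform(0.0, max_delay_ms)
        # Transmissions overlap if they start within tx_ms of each other.
        if abs(time_a - time_b) < tx_ms:
            collisions += 1
    return collisions


if __name__ == "__main__":
    events = 1000
    fixed = count_repeat_collisions(100.0, events, max_delay_ms=0.0)
    jittered = count_repeat_collisions(100.0, events)
    print(f"No randomisation:   {fixed} of {events} advertisements collide")
    print(f"With randomisation: {jittered} of {events} advertisements collide")
```

Without the random delay the two beacons stay in lockstep and collide on every advertisement. With it, their timings drift apart, so repeat collisions become the exception rather than the rule.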