New research presents a framework for synchronising multi-user augmented reality (AR) sessions across devices in shared environments using location beacons. Vision-based synchronisation, as used by Apple’s ARKit and Google’s ARCore, often fails in larger spaces or when the visual environment changes. To overcome this, the study introduces two alternative methods built on location beacon technologies: BLE-assist (Bluetooth Low Energy) and UWB-assist (ultra-wideband) synchronisation.

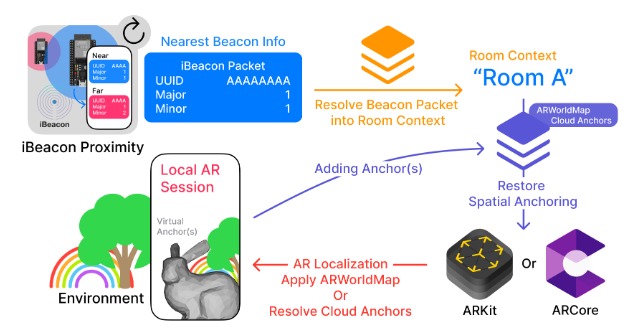

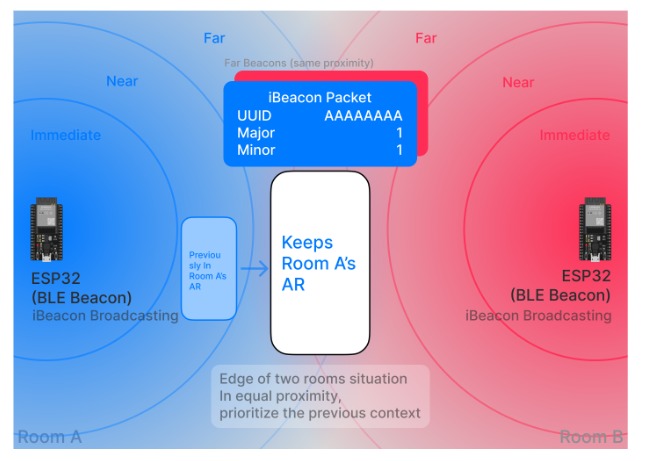

The BLE-assist method uses iBeacon broadcasts to determine the user’s room context and integrates this with existing AR anchoring frameworks. By breaking down large areas into room-sized contexts, this approach allows ARWorldMaps or Cloud Anchors to be managed in a more localised and efficient manner. It achieves high positional accuracy, but its performance drops under significant environmental changes.
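As a rough illustration of the room-context step, the sketch below picks a room from a batch of iBeacon readings and derives a key under which that room's ARWorldMap or Cloud Anchor set could be stored. It is a minimal sketch, not the study's implementation: the beacon-to-room table, the `min_rssi` cut-off, the strongest-median-RSSI rule and the key format are all assumptions made for the example.

```python
from statistics import median

# Hypothetical mapping from iBeacon (major, minor) identifiers to room labels.
BEACON_ROOMS = {(1, 1): "lobby", (1, 2): "gallery", (1, 3): "workshop"}


def select_room(readings, beacon_rooms=BEACON_ROOMS, min_rssi=-90):
    """Return the room whose beacon has the strongest median RSSI, or None.

    readings: iterable of (major, minor, rssi_dbm) tuples from one scan window.
    Unknown beacons and readings weaker than min_rssi are ignored.
    """
    by_beacon = {}
    for major, minor, rssi in readings:
        key = (major, minor)
        if key in beacon_rooms and rssi >= min_rssi:
            by_beacon.setdefault(key, []).append(rssi)
    if not by_beacon:
        return None
    # Median is less sensitive to single-sample RSSI spikes than the maximum.
    best = max(by_beacon, key=lambda k: median(by_beacon[k]))
    return beacon_rooms[best]


def map_key_for_room(room):
    """Hypothetical storage key for the room's saved map or anchor set."""
    return f"worldmap-{room}"


scan = [(1, 1, -80), (1, 2, -60), (1, 2, -62), (1, 1, -78), (9, 9, -40)]
room = select_room(scan)
print(room, map_key_for_room(room))
```

Keying the saved map by room keeps each map small, which is the localisation benefit described above; an app would then load only the map for the room it detects.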

On the other hand, the UWB-assist method uses ultra-wideband beacons and the device’s azimuth reading to create a fixed spatial reference. This allows persistent anchoring across sessions, with consistent resolution success even in varied physical surroundings. Although this method does not offer the same fine-grained accuracy as BLE-assist, it maintains consistent performance with a near-constant average synchronisation latency of 25 seconds. However, it is more technically involved: the initial ranging process needs time to stabilise, and localisation may take longer when many devices are present.
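To make the idea of a fixed spatial reference more concrete, here is a simplified 2D sketch of how a device could align its own session frame with a shared, beacon-anchored frame. It rests on several assumptions that are not taken from the study: the shared frame has x pointing east and y pointing north, UWB ranging has already yielded the device position in that frame, the azimuth is measured clockwise from north, and the function names are hypothetical.

```python
import math


def align_local_to_shared(p_local, yaw_local, p_shared, azimuth):
    """Estimate the 2D rigid transform from the local AR frame to the shared frame.

    p_local:   device (x, y) in its own AR session frame
    yaw_local: device heading in the local frame, radians counter-clockwise from local +x
    p_shared:  device (x, y) in the shared frame (assumed to come from UWB ranging)
    azimuth:   compass heading, radians clockwise from north
    Returns (theta, (tx, ty)) such that shared = R(theta) * local + t.
    """
    # Convert the compass azimuth to a counter-clockwise angle from east (+x).
    heading_shared = math.pi / 2 - azimuth
    theta = heading_shared - yaw_local
    c, s = math.cos(theta), math.sin(theta)
    tx = p_shared[0] - (c * p_local[0] - s * p_local[1])
    ty = p_shared[1] - (s * p_local[0] + c * p_local[1])
    return theta, (tx, ty)


def to_shared(point, theta, t):
    """Map a point from the local AR frame into the shared frame."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * point[0] - s * point[1] + t[0],
            s * point[0] + c * point[1] + t[1])


# Device at its local origin, facing local +x, which the compass reports as due north.
theta, t = align_local_to_shared((0.0, 0.0), 0.0, (2.0, 3.0), 0.0)
anchor_shared = to_shared((1.0, 0.0), theta, t)  # a point 1 m in front of the device
print(anchor_shared)
```

Because every device computes its transform against the same beacons and the same compass north, anchors expressed in the shared frame stay valid across sessions without relying on visual features.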

In comparative evaluations, UWB-assist proved more reliable and more robust to environmental changes, while BLE-assist was more accurate in anchor placement. UWB’s reference pose calculations showed a mean error of 0.04 metres in position and 0.11 radians (about 6.3 degrees) in orientation, which, while less precise than BLE, remains within an acceptable range for most applications.
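For readers who want to reproduce this kind of figure on their own data, mean position and orientation errors can be computed along the following lines. This is a generic sketch rather than the study's evaluation code: the `(x, y, yaw)` pose format and the function names are assumptions, and the sample values are made up for illustration.

```python
import math


def angle_diff(a, b):
    """Signed difference a - b in radians, wrapped to [-pi, pi)."""
    return (a - b + math.pi) % (2 * math.pi) - math.pi


def mean_pose_error(estimates, truths):
    """Return (mean position error in metres, mean absolute yaw error in radians).

    estimates, truths: equal-length sequences of (x, y, yaw) tuples.
    """
    if not estimates or len(estimates) != len(truths):
        raise ValueError("need two non-empty sequences of equal length")
    pos = [math.dist(e[:2], t[:2]) for e, t in zip(estimates, truths)]
    # Wrap the angle so that yaws of -3.1 and 3.1 rad count as close, not far apart.
    ang = [abs(angle_diff(e[2], t[2])) for e, t in zip(estimates, truths)]
    return sum(pos) / len(pos), sum(ang) / len(ang)


estimated = [(0.03, 0.04, 0.1), (1.0, 1.0, -3.1)]  # illustrative values only
reference = [(0.0, 0.0, 0.0), (1.0, 1.0, 3.1)]
pos_err, yaw_err = mean_pose_error(estimated, reference)
print(f"{pos_err:.3f} m, {yaw_err:.3f} rad ({math.degrees(yaw_err):.1f} deg)")
```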

The study also evaluates power consumption, scalability, and cost. BLE beacons (ESP32) consume more power than UWB beacons (DWM3001CDK), but the latter are more expensive to deploy. In terms of scalability, BLE is limited by map-saving conflicts and anchor lifespan, while UWB faces challenges in concurrent device ranging, although improvements in beacon hardware could address this.

In conclusion, the BLE-assist approach is better suited to short-term, high-precision AR experiences in relatively stable environments. UWB-assist is preferable for larger, more dynamic settings where consistent synchronisation is critical, even at the expense of slightly lower positional accuracy and higher latency. The source code for this work is publicly accessible for further development.

Our take on this:

One point worth noting is that using the ESP32 as a beacon platform is not optimal in terms of power consumption. Dedicated hardware beacons designed specifically for this purpose would significantly reduce power usage. Such specialised hardware would also offer a more compact and efficient form factor, with antenna configurations better suited to precise positioning and signal stability. This project shares similarities with our consultancy for Royal Museums Greenwich on the Cutty Sark.